1. Introduction

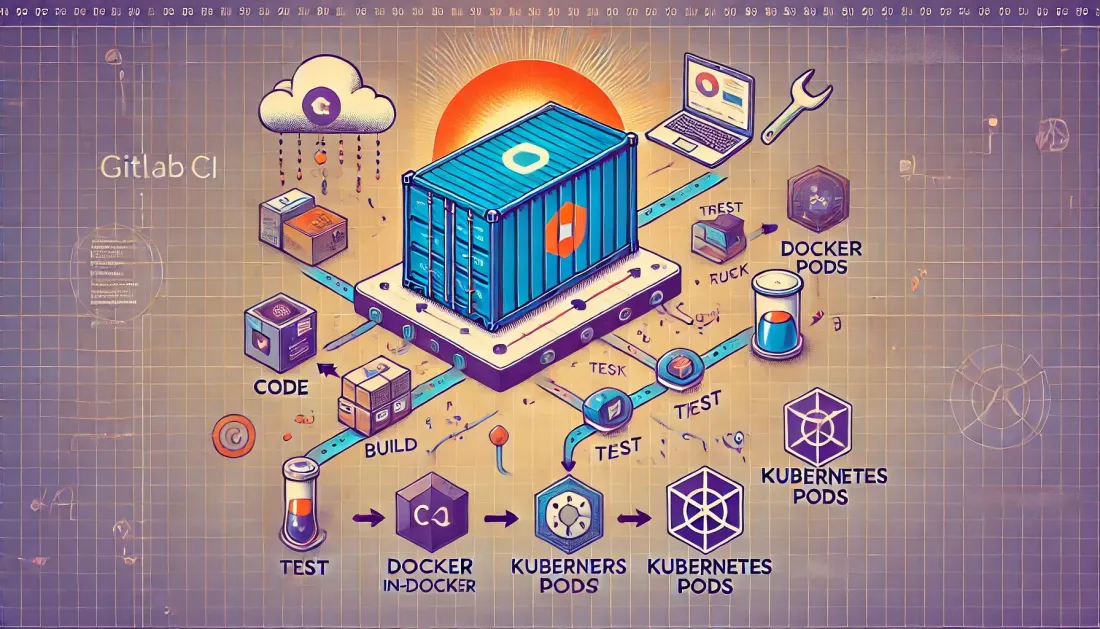

In the fast-paced world of DevOps and cloud-native applications, continuous integration and deployment (CI/CD) pipelines are the backbone of efficient software delivery. GitLab CI, Docker, and Kubernetes have emerged as powerful tools in this ecosystem, each bringing its own strengths to the table. However, combining these technologies to create a seamless build and deploy pipeline can be challenging, especially when it comes to building Docker images within a Kubernetes environment.

GitLab CI offers robust automation capabilities, Docker provides consistent and portable environments, and Kubernetes excels at orchestrating containerized applications. The challenge lies in bridging these technologies effectively, particularly when building Docker images as part of your CI pipeline running in Kubernetes.

Traditionally, tools like Kaniko have been used to build Docker images without requiring a Docker daemon. While Kaniko is a powerful solution, it's not always the best fit for every scenario. In this article, we'll explore an alternative approach: using Docker-in-Docker (DinD) with GitLab CI's Kubernetes executor to build Docker images directly within your Kubernetes cluster.

This method leverages GitLab Runner's Kubernetes executor and the Docker-in-Docker technique to create a flexible and powerful build environment. By the end of this article, you'll understand how to:

- Set up GitLab Runner with the Kubernetes executor

- Implement Docker builds in your GitLab CI pipeline

- Build and push Docker images from within Kubernetes

Let's dive in and explore how to harness the power of GitLab CI, Docker, and Kubernetes to create an efficient and scalable build pipeline.

2. Understanding the Docker-in-Docker (DinD) Approach

Docker-in-Docker (DinD) is a technique that allows you to run Docker inside a Docker container. This approach is particularly useful in CI/CD environments where you need to build Docker images as part of your pipeline, but you don't have direct access to the host's Docker daemon.

How Docker-in-Docker Works

In a typical Docker setup, containers interact with the Docker daemon running on the host machine. With DinD, we create a container that has its own Docker daemon. This container can then be used to build, run, and manage other Docker containers independently of the host system.

The process works as follows:

- A Docker container is started with its own Docker daemon.

- This container has the ability to create and manage other Docker containers and images.

- Within your CI/CD pipeline, you interact with this containerized Docker daemon to build your application images.
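The same three steps can be reproduced outside GitLab with a standalone Kubernetes Pod. The sketch below has two containers: a privileged `docker:20.10.16-dind` daemon and a `docker:20.10.16` client that sends commands to it. Containers in one Pod share a network namespace, so the client reaches the daemon on `localhost`. The Pod name `dind-demo` and the `hello-world` test image are illustrative assumptions; the sketch also assumes the cluster can pull from Docker Hub. TLS is disabled only to keep the example short.

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: dind-demo                  # hypothetical name for this sketch
spec:
  restartPolicy: Never
  containers:
    # Runs its own Docker daemon; dockerd needs privileged mode.
    - name: dind
      image: docker:20.10.16-dind
      securityContext:
        privileged: true
      env:
        - name: DOCKER_TLS_CERTDIR # empty: listen on plain TCP port 2375
          value: ""
    # Only the Docker CLI; it talks to the daemon in the container above.
    - name: client
      image: docker:20.10.16
      env:
        - name: DOCKER_HOST
          value: tcp://localhost:2375
      command: ["sh", "-c"]
      args:
        - |
          until docker info >/dev/null 2>&1; do sleep 1; done
          docker run --rm hello-world
```

The `dind` container keeps running after the client finishes, so delete the Pod once you have checked the client's logs.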

Advantages of Docker-in-Docker

- Isolation: DinD provides an isolated environment for building and testing Docker images, reducing potential conflicts with other processes on the host system.

- Flexibility: It allows you to use Docker commands in your CI/CD pipeline without needing to modify the host system or rely on shared Docker resources.

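- Security: Each build talks to its own daemon rather than a shared host daemon, so one job cannot see or tamper with another job's containers and images. This is not a hard security boundary, however, because the DinD container itself must run privileged (see below).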

- Consistency: DinD ensures that your build environment is consistent across different CI/CD runners, as it's contained within a Docker image itself.

Potential Drawbacks and Considerations

- Performance: Running Docker inside Docker can have some performance overhead compared to using the host's Docker daemon directly.

- Complexity: DinD adds another layer to your CI/CD setup, which can increase complexity and potential points of failure.

- Storage: Each DinD container has its own storage, which means you may need to manage caching and cleanup more carefully to avoid excessive disk usage.

- Security Considerations: While DinD improves isolation between builds, it requires running the DinD container in privileged mode, which effectively gives it root-level access to the node and must be carefully managed.

DinD in GitLab CI and Kubernetes

In the context of GitLab CI and Kubernetes, we'll use DinD to create a Docker build environment within our Kubernetes-based CI runners. This matters on Kubernetes in particular: since Kubernetes 1.24 removed dockershim, most nodes run containerd or CRI-O, so there is often no host Docker daemon to use at all. DinD lets us scale our CI/CD infrastructure with Kubernetes while still building Docker images as part of the pipeline.

By understanding the DinD approach, we can better appreciate how it enables us to build Docker images within a Kubernetes environment, providing a powerful alternative to tools like Kaniko.

3. Setting Up the Kubernetes Executor

The Kubernetes executor for GitLab Runner allows you to use Kubernetes to run your CI/CD jobs. This section will guide you through the process of setting up and configuring the Kubernetes executor to support Docker-in-Docker (DinD) builds.

3.1 Overview of GitLab Runner's Kubernetes Executor

The Kubernetes executor creates a Pod for each GitLab CI job. This Pod contains the following containers:

- A helper container that handles Git operations, artifacts, and caching

- A container for the CI job itself

- Additional service containers as needed (in our case, a Docker daemon container)
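Because these containers share one Pod, their CPU and memory can be sized separately in the runner's `config.toml`. The sketch below shows keys from GitLab Runner's Kubernetes executor settings as they would appear in the Helm `runners.config` value used in section 3.4; merge them into the existing `[runners.kubernetes]` table. The figures are placeholder assumptions to adapt to your workloads, not recommendations.

```yaml
runners:
  config: |
    [[runners]]
      [runners.kubernetes]
        # Build (job) container
        cpu_request = "500m"
        memory_request = "512Mi"
        memory_limit = "2Gi"
        # Service containers, including the DinD daemon
        service_cpu_request = "500m"
        service_memory_request = "1Gi"
        service_memory_limit = "4Gi"
        # Helper container
        helper_memory_limit = "256Mi"
```

Giving the DinD service its own, larger limits is one way to address the performance and storage overhead mentioned in section 2, since image builds run inside that container rather than the job container.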

3.2 Prerequisites

Before setting up the Kubernetes executor, ensure you have:

- A running Kubernetes cluster

- `kubectl` installed and configured to access your cluster

- Helm installed (optional, but recommended for easier installation)

3.3 Installing GitLab Runner in Your Kubernetes Cluster

You can install GitLab Runner using Helm or by applying Kubernetes manifests directly. Here's how to do it using Helm:

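- Add the GitLab Helm repository:

  ```shell
  helm repo add gitlab https://charts.gitlab.io
  helm repo update
  ```

- Install GitLab Runner:

  ```shell
  helm install --namespace gitlab --create-namespace gitlab-runner gitlab/gitlab-runner \
    --set gitlabUrl=https://gitlab.com/ \
    --set runnerRegistrationToken="<your-runner-token>"
  ```

Replace `<your-runner-token>` with your actual GitLab runner registration token. Registration tokens are deprecated as of GitLab 16.0; if you create the runner in the GitLab UI instead, pass the resulting runner authentication token through the chart's `runnerToken` value.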

3.4 Configuring the Executor for DinD

To enable Docker-in-Docker builds, you need to modify the GitLab Runner configuration. Create a values.yaml file with the following content:

```yaml
gitlabUrl: https://gitlab.com/
runnerRegistrationToken: "<your-runner-token>"

runners:
  config: |
    [[runners]]
      [runners.kubernetes]
        image = "ubuntu:20.04"
        privileged = true
      [[runners.kubernetes.volumes.empty_dir]]
        name = "docker-certs"
        mount_path = "/certs/client"
        medium = "Memory"
```

Apply this configuration:

```shell
helm upgrade --install --namespace gitlab gitlab-runner gitlab/gitlab-runner -f values.yaml
```

3.5 Explaining the Configuration

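- `image = "ubuntu:20.04"`: The default image for jobs that don't specify their own.

- `privileged = true`: Runs the job's containers, including the DinD service, in privileged mode. The Docker daemon needs this because it mounts filesystems and manages cgroups and network rules, which unprivileged containers cannot do. This is what makes Docker-in-Docker work, and it is also its main security cost.

- `volumes.empty_dir`: Creates an in-memory (`medium = "Memory"`) volume named `docker-certs`, mounted at `/certs/client`, so the TLS client certificates generated by the DinD daemon can be shared with the job container.

The DinD service container itself is not declared here; each job requests it through the `services` keyword in `.gitlab-ci.yml`, as shown in the next section. The helper image is not overridden either, so GitLab Runner uses its default helper image.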
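The runner Pod also needs permission to create job Pods in its namespace. Depending on your chart version and cluster policies, you may have to let the chart create RBAC resources for it. A minimal sketch of the extra `values.yaml` key, assuming the chart's `rbac.create` option (check the chart's own `values.yaml` for your version), is:

```yaml
# Additional key for values.yaml; merge with the configuration from section 3.4
rbac:
  create: true   # let the chart create the RBAC resources the runner needs
```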

3.6 Verifying the Setup

To verify that your Kubernetes executor is set up correctly:

- Go to your GitLab project's CI/CD settings.

- Check that the runner is registered and active.

- Run a simple pipeline to test the setup.
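For the last step, a pipeline with a single job that only talks to the DinD service is enough to confirm that privileged mode and service networking work. This is a minimal sketch: the job name `dind-smoke-test` is illustrative, it disables TLS just like the pipeline in section 4, and `hello-world` is pulled from Docker Hub.

```yaml
dind-smoke-test:                     # hypothetical job name
  image: docker:20.10.16
  services:
    - docker:20.10.16-dind
  variables:
    DOCKER_HOST: tcp://docker:2375   # DinD service, reachable under the alias "docker"
    DOCKER_TLS_CERTDIR: ""           # TLS disabled to keep the check simple
  script:
    # The service may still be starting: poll for up to ~30 s.
    - for i in $(seq 1 30); do docker info >/dev/null 2>&1 && break; sleep 1; done
    # Fails the job if the daemon never became reachable.
    - docker info
    - docker run --rm hello-world
```

If this job fails while `docker info` is being retried, the likely causes are a missing `privileged = true` or a job that was not picked up by the Kubernetes runner.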

With this configuration, your GitLab Runner in Kubernetes is now ready to handle Docker-in-Docker builds. In the next section, we'll look at how to implement Docker builds in your GitLab CI pipeline using this setup.

4. Implementing Docker Builds in GitLab CI

Now that we have our Kubernetes executor set up with Docker-in-Docker capabilities, let's implement Docker builds in our GitLab CI pipeline. This section will guide you through creating a .gitlab-ci.yml file, defining stages and jobs, and configuring the Docker service.

4.1 Creating a .gitlab-ci.yml File

In the root of your project, create a file named .gitlab-ci.yml. This file will define your CI/CD pipeline.

4.2 Defining Stages and Jobs

Let's start with a basic structure for our pipeline:

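```yaml
stages:
  - build
  - test
  - deploy

variables:
  # With the Kubernetes executor, the DinD service is reached through its alias "docker".
  DOCKER_HOST: tcp://docker:2375
  DOCKER_TLS_CERTDIR: ""

build:
  stage: build
  image: docker:20.10.16
  services:
    - docker:20.10.16-dind
  before_script:
    - docker info
  script:
    # "my-app" is a placeholder: replace it with your full image path,
    # for example registry.example.com/my-group/my-app
    - docker build -t my-app:$CI_COMMIT_SHA .
    - docker push my-app:$CI_COMMIT_SHA

test:
  stage: test
  script:
    - echo "Run your tests here"

deploy:
  stage: deploy
  script:
    - echo "Deploy to Kubernetes here"
```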

4.3 Explaining the Configuration

Let's break down the key components of this configuration:

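- `stages`: Defines the pipeline stages: build, test, and deploy.

- `variables`: `DOCKER_TLS_CERTDIR: ""` disables TLS between the Docker client and the DinD daemon, so the daemon listens on plain TCP port 2375. This keeps the example simple, but anything that can reach the job Pod on that port gets full control of the daemon, so prefer TLS beyond experiments.

- `build` job:
  - `image: docker:20.10.16`: Runs the job in the official Docker image, which provides the Docker CLI.
  - `services: - docker:20.10.16-dind`: Starts the Docker-in-Docker daemon as a service.
  - `before_script`: Runs `docker info` to verify that the client can reach the daemon.
  - `script`: Contains the Docker build and push commands.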
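If you want to keep TLS enabled instead, the `docker-certs` volume from section 3.4 is what makes it possible: the DinD service writes its client certificates to `/certs/client`, and the shared in-memory volume makes them visible to the job container. The sketch below follows the pattern in GitLab's documentation for DinD on the Kubernetes executor (verify it against the docs for your runner version) and replaces the `variables` block above:

```yaml
variables:
  DOCKER_HOST: tcp://docker:2376                 # TLS port of the DinD service
  DOCKER_TLS_CERTDIR: "/certs"                   # DinD generates CA, server and client certs here
  DOCKER_TLS_VERIFY: 1                           # client verifies the daemon's certificate
  DOCKER_CERT_PATH: "$DOCKER_TLS_CERTDIR/client" # read from the shared docker-certs volume
```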

4.4 Configuring the Docker Service

The services section in the build job is crucial for enabling Docker-in-Docker:

```yaml
services:
  - docker:20.10.16-dind
```

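This starts a Docker daemon in a separate service container within the job's Pod, not inside the job container itself. The Docker CLI in the job container talks to that daemon over the Pod's network, using the hostname `docker` that GitLab derives from the service image name.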
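The short form above relies on that derived hostname. GitLab's extended `services` syntax lets you state the alias explicitly. Because the daemon may not yet accept connections when the job script starts, it is also common to poll it before building. A sketch, with the ~30-second wait being an arbitrary assumption:

```yaml
build:
  stage: build
  image: docker:20.10.16
  services:
    - name: docker:20.10.16-dind
      alias: docker            # hostname the job uses to reach the daemon
  before_script:
    # Wait for up to ~30 s for the DinD daemon to accept connections.
    - for i in $(seq 1 30); do docker info >/dev/null 2>&1 && break; sleep 1; done
    - docker info
```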

4.5 Building the Docker Image

In the script section of the build job, we use standard Docker commands:

```yaml
script:
  - docker build -t my-app:$CI_COMMIT_SHA .
  - docker push my-app:$CI_COMMIT_SHA
```

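- `docker build`: Builds the image using the Dockerfile in your project root.

- `$CI_COMMIT_SHA`: A predefined GitLab CI variable containing the commit SHA, used here as the image tag.

- `docker push`: Pushes the built image to a registry. Docker picks the registry from the image name, so a bare `my-app` would be sent to Docker Hub as `docker.io/library/my-app` and rejected. In a real pipeline, replace `my-app` with the full repository path, such as GitLab's predefined `$CI_REGISTRY_IMAGE` or your own registry host and namespace. You'll also need to configure authentication, which we cover in the next section.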

4.6 Adding Authentication for Docker Registry

To push images, you'll need to authenticate with your Docker registry. Add these lines to your build job:

```yaml
before_script:
  - docker info
  - echo "$DOCKER_PASSWORD" | docker login -u "$DOCKER_USERNAME" --password-stdin "$DOCKER_REGISTRY"
```

Make sure to set DOCKER_USERNAME, DOCKER_PASSWORD, and DOCKER_REGISTRY as CI/CD variables in your GitLab project settings.
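If you push to GitLab's own container registry, you don't need to define credentials yourself: GitLab provides `CI_REGISTRY`, `CI_REGISTRY_USER`, `CI_REGISTRY_PASSWORD`, and `CI_REGISTRY_IMAGE` to every job. The sketch below assumes the project's container registry is enabled. Because each DinD daemon starts with empty storage, it also pulls the previous `latest` image to seed the layer cache. `--cache-from` in this form assumes the classic builder that Docker 20.10 uses by default; with BuildKit enabled you would also need to build with `--build-arg BUILDKIT_INLINE_CACHE=1`.

```yaml
build:
  stage: build
  image: docker:20.10.16
  services:
    - docker:20.10.16-dind
  variables:
    DOCKER_HOST: tcp://docker:2375
    DOCKER_TLS_CERTDIR: ""
  before_script:
    - echo "$CI_REGISTRY_PASSWORD" | docker login -u "$CI_REGISTRY_USER" --password-stdin "$CI_REGISTRY"
  script:
    # Seed the cache from the previous image; ignore failure on the first run.
    - docker pull "$CI_REGISTRY_IMAGE:latest" || true
    - docker build --cache-from "$CI_REGISTRY_IMAGE:latest" -t "$CI_REGISTRY_IMAGE:$CI_COMMIT_SHA" -t "$CI_REGISTRY_IMAGE:latest" .
    - docker push "$CI_REGISTRY_IMAGE:$CI_COMMIT_SHA"
    - docker push "$CI_REGISTRY_IMAGE:latest"
```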

With this configuration, you now have a minimal GitLab CI pipeline that can build Docker images using Docker-in-Docker within a Kubernetes environment.

5. Conclusion

Throughout this article, we've explored an approach to building Docker images with GitLab CI jobs running on Kubernetes, without relying on Kaniko. Let's recap the key points we've covered:

- We started by understanding the Docker-in-Docker (DinD) approach, which allows us to build Docker images within our CI/CD pipeline running in Kubernetes.

- We then set up the Kubernetes executor for GitLab Runner, configuring it to support DinD builds.

- We implemented Docker builds in our GitLab CI pipeline, creating a `.gitlab-ci.yml` file that defines our build, test, and deploy stages.

This approach offers several advantages:

- Flexibility: By using DinD, we maintain the full capabilities of Docker in our build process, allowing for complex multi-stage builds and custom Docker commands.

- Familiarity: For teams already comfortable with Docker, this method leverages existing knowledge and tools.

- Integration: This setup integrates seamlessly with GitLab CI and Kubernetes, creating a smooth workflow from code commit to deployment.

However, it's important to note that this method isn't without its considerations:

- Resource Usage: Running Docker inside Docker can be more resource-intensive than alternative methods like Kaniko.

- Security: Running containers in privileged mode, as DinD requires, grants them broad access to the node and needs careful management.

As with any CI/CD solution, the key to success is continuous improvement. Regularly review your pipeline, gather feedback from your team, and stay updated with the latest best practices in the rapidly evolving world of DevOps.

Remember, the goal of CI/CD is to enhance your team's ability to deliver value to your users. Whether you choose this DinD approach, Kaniko, or another method, the most important factor is that it meets your team's needs and helps you deliver high-quality software efficiently and reliably.

By mastering these techniques, you're well-equipped to build robust, efficient CI/CD pipelines that can handle complex Docker builds and Kubernetes deployments. As you implement and refine this process, you'll be contributing to a faster, more reliable software delivery lifecycle for your organization.